The Case for Downranking Fear Speech

There's more of it than hate speech, and it's linked to violence - BCB #166

If you were online at all two weeks ago, you probably saw posts like this:

Compromise is dead. The Left doesn’t want debate—they want to erase us. From campus purges to Big Tech censorship to the assassination of Charlie Kirk, the evidence is clear.

The Left is declaring if you speak against them. it’s ok for them to KILL you. No 1st amendment rights in their world. They want us ALL dead. This isn’t hyperbolic, it’s real life.

Much has been said about moderating “hate speech” in online spaces. Probably too much. It has become a focal point of arguments over the politics of speech law. Some definitions explicitly include “political orientation” or “ideology” among protected characteristics, though most don’t.

But if the goal is reducing violence, all of this may be beside the point.

Unlike “hate speech,” which has a slippery and endlessly contested definition, “dangerous speech” has a very simple one:

Dangerous speech is any form of expression (including speech, text, or images) that can increase the risk that its audience will condone or participate in violence against members of another group.

Will posts like the ones above increase the likelihood of violent retaliation? Quite likely, according to dangerous speech researchers. After all, if the other side is a genuine threat, attacking them first can look like self-defense.

(Just to be clear: yes, I absolutely do think there is a reciprocal problem when folks on the left express the same sort of totalizing fear of people on the right.)

Fear Speech is more common than Hate Speech

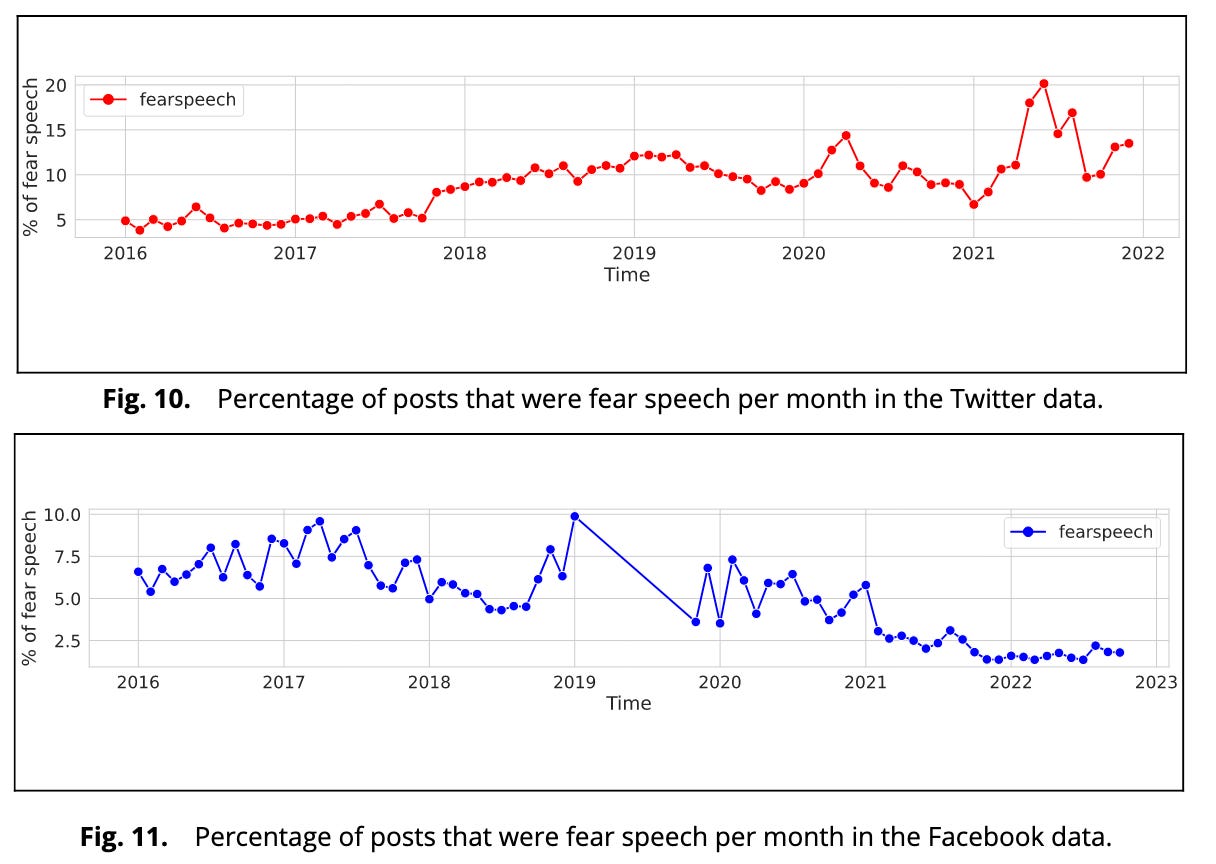

A 2021 study by Punyajoy Saha and collaborators examined the rise of fear speech, that is, speech which “attempts to incite fear about a target community.” While “hate speech” made up only a fraction of a percent of all the posts sampled, fear speech was massively more common, sometimes 10% of all posts:

This is dangerous because, as the study authors write, “existential fear can bias peaceful people toward extremism,” citing previous experiments.

And yet it’s completely reasonable to worry about “their” motives when one of “them” has just killed one of “us.” (Though there’s always an argument about group versus individual blame in the wake of an atrocity.) Sharing information about threats is part of what keeps us safe. It’s normal and often healthy human behavior.

The problem is that modern media lets us hear an entire country’s fear all at once, distorting our perceptions.

Just being able to see thousands of people express their fear at once is distorting, because we aren’t also seeing the millions more who don’t feel as threatened. And as we have covered many times, both social media and traditional media tend to preferentially amplify more extreme content and speakers.

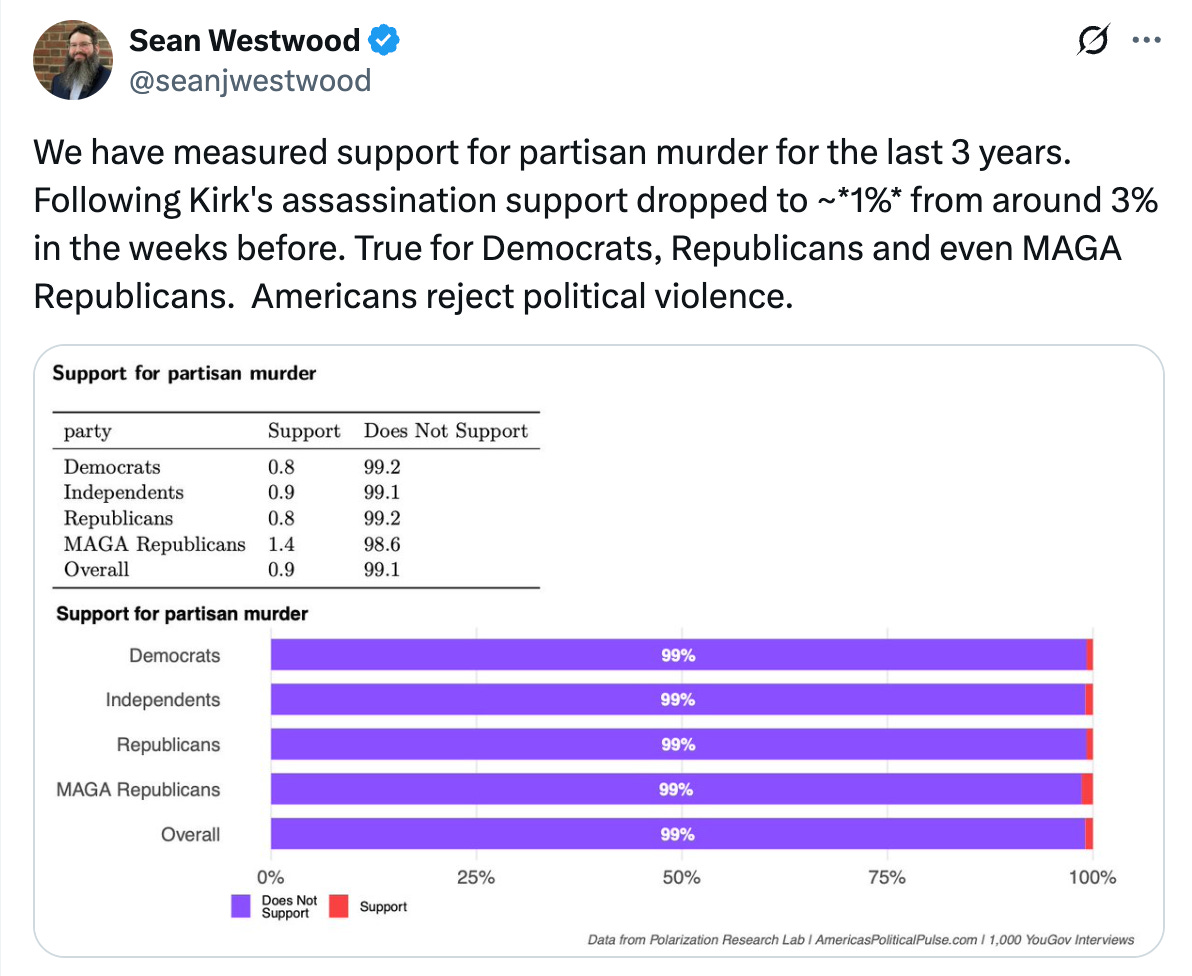

One consequence is that we all, on both sides, dramatically overestimate the other side’s support for political violence. Both my own haphazard post-Kirk social media sample and far more careful research show that only about 2% of Americans would support the murder of a political opponent. (Some surveys showing much higher numbers are probably not trustworthy, because of inattentive respondents and unclear questions.) In fact, early results suggest that Kirk’s assassination actually reduced support for violence.

Rightsizing Fear Speech

Eliminating fear speech is unreasonable, and probably even harmful to public safety. It would also raise all of the usual freedom of expression concerns (and regular readers will know we care deeply about this). But our media system is distorting our perceptions like a funhouse mirror. Can we correct our view?

This would involve reducing the distribution of fear speech in some way. Machine learning keeps getting better at parsing subtle meaning, so automatically identifying fear speech online seems well within reach. Once it is identified, one could simply lower its score in platform ranking systems. But there may be a better way.

Fear speech and other kinds of extreme content are over-represented on social media in large part because standard engagement-based ranking prioritizes the posts that people read, watch, like and share. And, as with a car crash, we all pay more attention to threats; again, this is a normal and often healthy social instinct. It would be possible to break the link between engagement and distribution for fear speech specifically. In fact, several platforms already do this for other sensitive topics, including health information and political content, as my colleagues and I have previously reported:

In 2021, Facebook adjusted engagement weights for ranking political (“civic”) content: “We take away all weight by which we uprank a post based on our prediction that someone will comment on it or share it.” Two other platforms attending our workshop also reported using modified ranking for civic and health content.
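To make the difference between the two interventions concrete, here is a minimal sketch of an engagement-weighted ranker. Everything in it is hypothetical: the `Post` fields, the `WEIGHTS` values, and the `is_fear_speech` flag (standing in for some fear-speech classifier) are illustrative assumptions, not any platform's actual system. `score` drops the comment and share predictions for fear speech, loosely mirroring the Facebook change quoted above. `demoted_score` shows the blunter alternative of keeping every weight and multiplying the whole score by a penalty.

```python
from dataclasses import dataclass


@dataclass
class Post:
    """A candidate post with hypothetical model predictions attached."""
    post_id: str
    p_read: float          # predicted chance the user reads the post
    p_like: float          # predicted chance of a like
    p_comment: float       # predicted chance of a comment
    p_share: float         # predicted chance of a reshare
    is_fear_speech: bool   # label from a hypothetical fear-speech classifier


# Illustrative weights only; real rankers combine many more signals.
WEIGHTS = {"p_read": 1.0, "p_like": 2.0, "p_comment": 5.0, "p_share": 10.0}

# Engagement predictions whose upranking weight is removed for fear speech,
# loosely mirroring the comment/share change described for civic content.
ENGAGEMENT_SIGNALS = {"p_comment", "p_share"}


def score(post: Post, strip_engagement: bool = True) -> float:
    """Weighted sum of predictions, optionally ignoring engagement for fear speech."""
    total = 0.0
    for signal, weight in WEIGHTS.items():
        if strip_engagement and post.is_fear_speech and signal in ENGAGEMENT_SIGNALS:
            continue  # break the link between engagement and distribution
        total += weight * getattr(post, signal)
    return total


def demoted_score(post: Post, penalty: float = 0.5) -> float:
    """The blunter alternative: keep all weights, then scale fear speech down."""
    base = score(post, strip_engagement=False)
    return base * penalty if post.is_fear_speech else base


def rank(posts: list[Post], strip_engagement: bool = True) -> list[Post]:
    """Order posts from highest to lowest score."""
    return sorted(posts, key=lambda p: score(p, strip_engagement), reverse=True)


# Made-up example posts: a calm one and a more "engaging" alarming one.
posts = [
    Post("calm", p_read=0.60, p_like=0.10, p_comment=0.02, p_share=0.01, is_fear_speech=False),
    Post("alarm", p_read=0.65, p_like=0.15, p_comment=0.10, p_share=0.08, is_fear_speech=True),
]

print([p.post_id for p in rank(posts, strip_engagement=False)])  # ['alarm', 'calm']
print([p.post_id for p in rank(posts)])                          # ['calm', 'alarm']
```

With engagement stripped, the fear-speech post still earns distribution from the predictions that it will be read and liked; it just no longer gets a boost for provoking comments and reshares. A flat penalty, by contrast, scales everything down uniformly, and how much either approach changes the ranking depends entirely on the (here invented) weights and predictions.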

While it would certainly be possible to broadly downrank or even entirely remove fear speech, removing engagement signals from how it is ranked is a subtler and more natural intervention. It would bring us closer to the social dynamics we evolved with: before peer-to-peer mass communication, fear couldn’t spread virally across a nation. We can’t live without expressions of fear, but we can restore balance in the way our technology handles them.

Thread of the Week

This is a remarkable illustrated history of the rise of American polarization over the last decade.

Not North vs South. Not Red vs Blue. It’s a 10-year trick that made neighbors fight. We’ll walk the timeline like a comic: who pushed what, how our brains got hacked, and how to beat it. Welcome to THE FAKE CIVIL WAR. Buckle up.