What kind of politics would Musk's “TruthGPT” have? - BCB #48

Also: how to tackle the political divide together, and America is ranked as “severely polarized”

Elon Musk wants to create an AI that’s not as politically correct as ChatGPT

Elon Musk was recently on Fox News’ “Tucker Carlson Tonight” to say he wants to launch his own generative AI called “TruthGPT,” an alternative to large language models (LLMs) like ChatGPT that he claims are trained to be too politically correct, and therefore untruthful. ChatGPT definitely has Blue politics, but that doesn’t mean it’s clear what politics a chatbot should have.

Musk didn’t give examples of how political correctness is untruthful, but this claim is a common Red trope. And certainly Blue has its blind spots: we previously discussed how Jon Stewart was chastised by his Blue supporters for even considering the possibility of a Wuhan lab leak. It’s also not clear that ChatGPT is any less truthful about politics than about anything else, as LLMs routinely “hallucinate” (make things up) when unsure of the answer.

On the other hand, the evidence is accumulating that ChatGPT really does have staunchly Blue political sensibilities. We’ve discussed how ChatGPT scores Blue on political tests. A more comprehensive recent study by researchers at Stanford University set out to identify whose opinions LLMs actually represent by comparing LLM output to opinion polls across 60 US demographic groups. It turns out the LLMs poorly represent the views of several demographics, including people over 65, widowed women, and highly religious people.

The study also found that LLMs caricature the views of liberals and moderates, for instance by implying that Biden has a 99% approval rating when his actual approval rating was 40% at the time of writing.

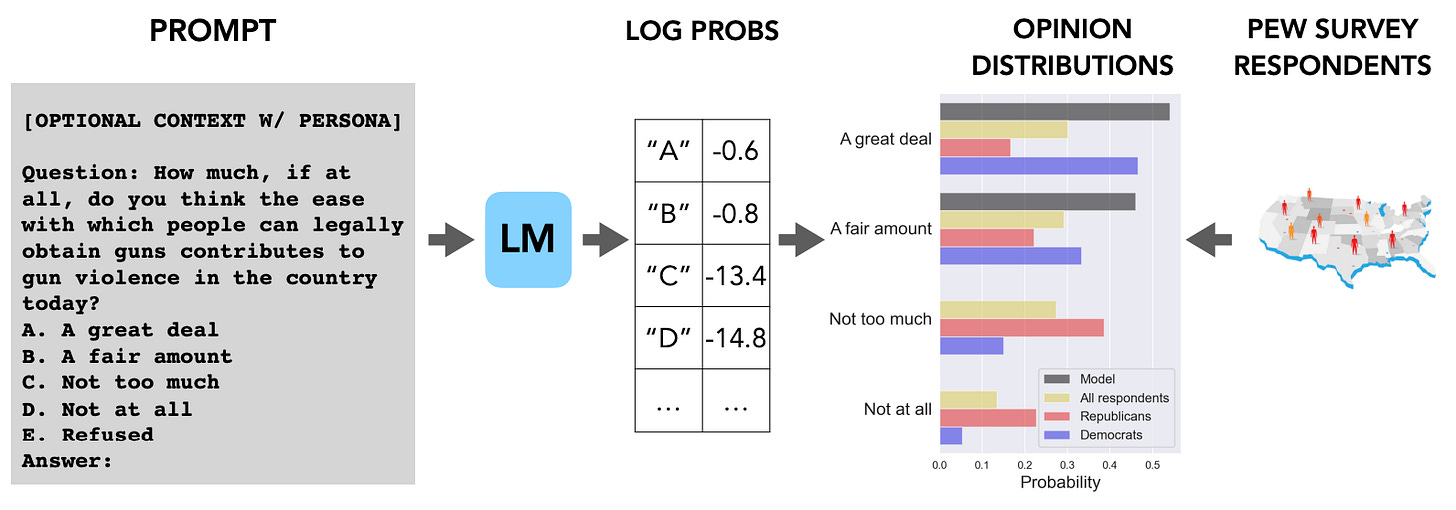

These researchers compared the output of a variety of AI language models from different companies to opinion polling across different topics and demographics. In the diagrams, the topics are listed down the left while the models are listed across the bottom. Generally, the older and less sophisticated models are on the left, while more recent and complex models are on the right. The free version of ChatGPT uses a variant of the “text-davinci-003” model in the rightmost column, part of the family sometimes known as GPT-3.5, and this model scores very Blue (GPT-4 was not tested).
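For readers curious how "comparing LLM output to opinion polls" can be turned into a single number, here is a minimal Python sketch. It is our own illustration, not the researchers' code: it assumes each question's answer options form an ordered scale (say, "strongly disagree" through "strongly agree") and scores agreement as one minus a normalized 1-Wasserstein distance between the model's answer distribution and a demographic group's poll distribution, which is similar to the representativeness score we understand the paper to use. The function names and example numbers are hypothetical.

```python
# Hypothetical sketch: how closely does a model's answer distribution on one
# ordered multiple-choice question match a demographic group's poll results?
from itertools import accumulate


def wasserstein_1d(p, q):
    """1-Wasserstein distance between two distributions over the same
    ordered answer options, with adjacent options one unit apart."""
    if len(p) != len(q):
        raise ValueError("distributions must cover the same options")
    # On an ordered 1-D scale, W1 equals the sum of absolute gaps between
    # the two cumulative distributions.
    cdf_p = list(accumulate(p))
    cdf_q = list(accumulate(q))
    return sum(abs(a - b) for a, b in zip(cdf_p, cdf_q))


def representativeness(model_dist, group_dist):
    """1.0 means identical distributions; 0.0 means as far apart as
    possible (all answers piled on opposite ends of the scale)."""
    n = len(model_dist)
    # The largest possible W1 on an n-option scale is n - 1.
    return 1 - wasserstein_1d(model_dist, group_dist) / (n - 1)


# Made-up distributions for a four-option question, for illustration only.
model = [0.0, 0.0, 0.1, 0.9]   # the model almost always picks the last option
group = [0.3, 0.3, 0.2, 0.2]   # the group's answers are spread out
print(representativeness(model, group))
```

In this made-up example the score comes out a little under 0.5, because the model piles its answers onto one end of the scale while the group spreads across all four options; two identical distributions would score exactly 1.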

It’s not just political ideology — later models most closely match the opinions of people making $100,000 or more, with post-graduate degrees.

We’ve speculated on the reason for ChatGPT’s Blue tendencies before. It could be that OpenAI must appeal to the sensibilities of the Blue professional class, as the negative opinions of journalists and academics might be most damaging to their reputation. Certainly, they’ve put a lot of work into ensuring the bot never says anything racist or sexist (though without complete success, as no one really knows how to fully control these massive AI models). The model’s opinions could also be driven by its training data; for example, online journalism tends to have a very Blue, upper-middle-class orientation.

The bigger question here is: exactly whose political views should a chatbot represent? One could argue that its opinions should match the median voter, but this won’t necessarily mean it endorses smart policies. It might end up with just the same blind spots as the hypothetical average American. Or, one could argue that we should have a diversity of bots with different opinions — in which case creating TruthGPT makes a kind of sense.

The political divide can be tackled together

We previously covered Peter Coleman and Pearce Godwin’s Political Courage Challenge and how to overcome tribal groupthink. In this final piece in their series for Time, they describe how family, friends, and neighbors feel separated from one another due to the political divide — a pertinent topic from one of our first issues. Here’s what they suggest we need to do to start reconnecting:

As much as we love blaming others, it’s an addiction that Americans must overcome. People who score higher on the Blame Intensity Inventory survey get more satisfaction from watching the other side suffer when they believe that side is to blame for something.

Simply enjoying the outdoors and being in nature with people from across the aisle has been shown to foster empathy, especially when coupled with shared physical activities such as marching in bands, playing on sports teams, or dancing in groups.

Dismantling the incentives that drive polarization isn’t easy, as they are deeply rooted in mainstream society:

The business models of most major social media platforms that intentionally sort us into partisan tribes and prey on our sense of outrage for profit; the politicization and entertainmentization of many of our large news media outlets that seem to value attention-grabbing and market share over accurate, ethical reporting; the high-stakes, winner-takes-all forms of partisan politics that result in a cult-like ‘party over country’ mentality; and the devastating effects of decades of runaway inequality in America that has left countless citizens feeling excluded, abandoned, ridiculed, and so enraged that they’d rather tear down our institutions than try to reform them. We will never escape the grip of such a system of forces through civil conversation alone.

But there is hope! Coleman and Godwin remind us of the 8,000 bridge-building organizations across the US that work across party lines on shared problems, including us at BCB.

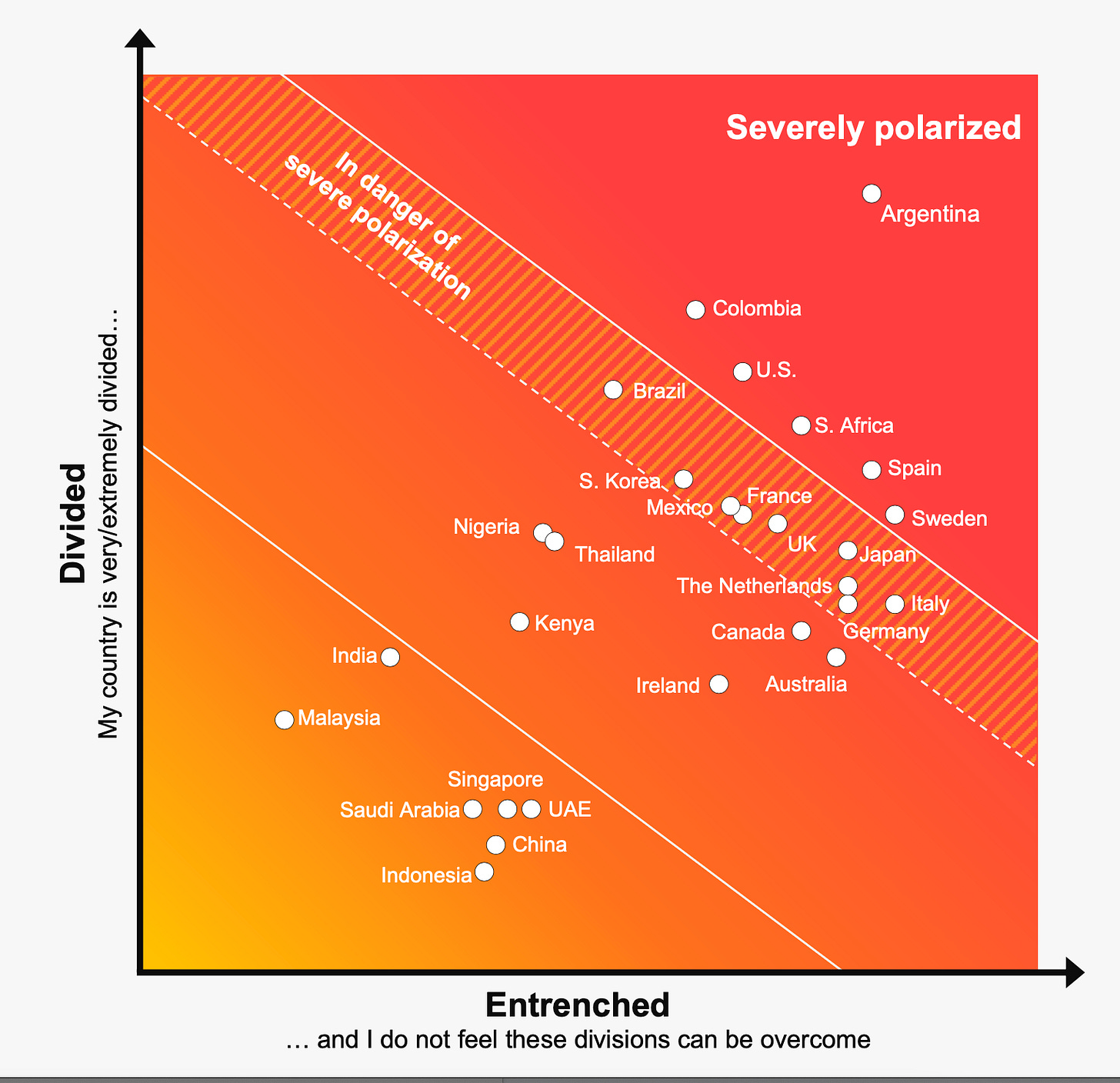

America ranked “severely polarized” alongside five other nations

The Edelman Trust Barometer is an annual survey of more than 32,000 respondents that measures trust worldwide. In its 2023 report, America was ranked as “severely polarized,” a category given only to the six most polarized countries in the survey. Argentina was ranked most polarized, followed by Colombia, the United States, South Africa, Spain, and Sweden.

Edelman also wanted to know what drives polarization, so they looked at correlations between polarization and other questions on the survey. They found six main determinants: distrust in government, a lack of shared identity, systemic unfairness, economic pessimism, societal fears, and distrust in the media. The report notes that only 42% of people in the US actually trust their government, among the lowest of the countries surveyed.
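Looking for survey questions that move together with polarization comes down to measuring correlation. As a hypothetical sketch (not Edelman's actual analysis, whose details we haven't reproduced), a Pearson correlation between two per-country scores, such as distrust in government and a polarization score, can be computed like this; the function name and the example scores are illustrative only.

```python
# Hypothetical sketch: Pearson correlation between two per-country scores,
# e.g. share distrusting government vs. a polarization score.
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length sequences of length >= 2")
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    # Covariance (unnormalized) and the two spreads (unnormalized).
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        raise ValueError("a constant series has no defined correlation")
    return cov / (sx * sy)


# Made-up scores for four imaginary countries, for illustration only.
distrust = [30, 45, 55, 70]
polarization = [20, 35, 40, 60]
print(round(pearson(distrust, polarization), 2))
```

A value near +1 or -1 means the two scores rise and fall together across countries, while a value near 0 means they don't. On its own, though, a correlation can't say which factor is driving the other.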

Quote of the Week

The hard truth about America today is that Red and Blue Americans need each other desperately. As outlined in a recent World Bank Group report, our nation is today facing multiple, overlapping and compounding global crises, which quite simply require all hands on deck.

– Pearce Godwin & Peter Coleman

Super interesting! Just a small thing: what happened with the alignment of the 3 tables? Pretty much impossible to trace what is what. The source paper doesn't have this issue. Love your article; happy to fix the diagrams for you if you care enough to replace them.

This is the second time I've seen the claim that LLMs "hallucinate". The word choice is interesting - they routinely create text that includes untruths, possibly at a rate approaching a human pathological liar. But they don't have senses, and so aren't capable of hallucination.

The first time I saw it, I thought an engineer was being tactful - and just a bit humorous - describing how they filtered a chatbot's recommendations to include only things that actually exist.

But your use of the same term suggests this is becoming the standard way to describe LLMs producing falsehoods, particularly falsehoods lacking any grounding in reality. (I.e. they didn't find the false statement in their training data.) I'm curious where the term came from.